The Circular Financing Pattern Everyone Sees

OpenAI's $20 billion figure is an annualized run rate: one month's revenue multiplied by twelve. Against the $1.4 trillion infrastructure commitment over eight years ($175 billion annually), this creates a 70-to-1 ratio of total forward spending to current annual revenue (8.75-to-1 on a per-year basis), a gap with no obvious precedent in corporate history.
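Both ratios follow from simple division. Below is a minimal Python sketch that uses the article's round figures as inputs. The monthly revenue is back-solved from the $20 billion run rate, so treat it as illustrative rather than a reported number.

```python
# Run-rate and commitment ratios, using the article's round figures.
RUN_RATE_USD = 20e9          # annualized run rate: one month's revenue x 12
COMMITMENT_USD = 1.4e12      # total infrastructure commitment
COMMITMENT_YEARS = 8         # commitment window

monthly_revenue = RUN_RATE_USD / 12                     # back-solved, illustrative
annual_commitment = COMMITMENT_USD / COMMITMENT_YEARS   # spend per year

total_ratio = COMMITMENT_USD / RUN_RATE_USD             # total spend vs. one year of revenue
annual_ratio = annual_commitment / RUN_RATE_USD         # yearly spend vs. one year of revenue

print(f"Implied monthly revenue: ${monthly_revenue / 1e9:.2f}B")
print(f"Annual commitment: ${annual_commitment / 1e9:.0f}B")
print(f"Total commitment / run rate: {total_ratio:.0f}x")
print(f"Annual commitment / run rate: {annual_ratio:.2f}x")
```

The 70-to-1 figure sets the full multi-year commitment against a single year of revenue. On a like-for-like annual basis the ratio is 8.75-to-1. That is still a large gap, but it is a different claim.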

The circularity is documented and accelerating. Microsoft's October 29 earnings disclosed a $3.1 billion quarterly hit from OpenAI losses under equity method accounting. Microsoft held a 32.5% stake during Q1 FY2026 (later diluted to 27% after the October 28 restructuring), so the charge implies OpenAI quarterly net losses approaching $9.5 billion. That is an annualized loss rate exceeding $38 billion, against a reported annual revenue run rate of around $20 billion. Microsoft's OpenAI-related charge rose 492.7% year over year, from $523 million in Q1 FY2025.
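The implied loss figures come from dividing Microsoft's equity-method charge by its ownership share. The Python sketch below reproduces them. It assumes the charge maps one-to-one onto Microsoft's pro-rata share of OpenAI's net loss. Real equity-method accounting can include basis differences and other adjustments that this ignores. The helper names are hypothetical.

```python
# Back out OpenAI's implied net loss from Microsoft's equity-method charge.
# Assumption: the charge equals Microsoft's pro-rata share of OpenAI's net loss;
# basis differences and other accounting adjustments are ignored.


def implied_investee_loss(investor_charge: float, ownership_share: float) -> float:
    """Investee net loss implied by an investor's equity-method charge."""
    if not 0 < ownership_share <= 1:
        raise ValueError("ownership_share must be in (0, 1]")
    return investor_charge / ownership_share


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


CHARGE_Q1_FY26 = 3.1e9   # Microsoft's OpenAI-related hit, Q1 FY2026
CHARGE_Q1_FY25 = 523e6   # comparable hit, Q1 FY2025
STAKE_Q1_FY26 = 0.325    # Microsoft's stake during the quarter

quarterly_loss = implied_investee_loss(CHARGE_Q1_FY26, STAKE_Q1_FY26)
annualized_loss = quarterly_loss * 4
yoy_increase = pct_change(CHARGE_Q1_FY26, CHARGE_Q1_FY25)

print(f"Implied quarterly loss: ${quarterly_loss / 1e9:.2f}B")
print(f"Annualized loss: ${annualized_loss / 1e9:.1f}B")
print(f"YoY change in charge: {yoy_increase:.1f}%")

# Sensitivity only: the same charge divided by the post-restructuring 27% stake.
print(f"At a 27% stake: ${implied_investee_loss(CHARGE_Q1_FY26, 0.27) / 1e9:.2f}B")
```

The 27% line is a sensitivity check, not a restatement. The article states that the 32.5% stake applied during the quarter in question.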

The ecosystem-wide pattern is clear. Microsoft commits $13 billion to OpenAI ($11.6 billion funded as of September 30, 2025). OpenAI commits to massive capacity purchases from Azure and other cloud providers. Hyperscalers report this AI demand to Wall Street, justifying combined 2025 capex of $366-370 billion (Amazon $125B, Alphabet $91-93B, Microsoft $80B, Meta $70-72B). Measured against enterprise software budgets, this closed loop cannot generate enough external revenue to pay for itself. It works only if measured against a vastly larger addressable market.
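As a quick consistency check, the combined capex range is the sum of the per-company low and high estimates. The sketch below uses the article's figures in billions of dollars.

```python
# Combined 2025 hyperscaler capex, as (low, high) estimates in $ billions.
CAPEX_2025_BN = {
    "Amazon": (125, 125),
    "Alphabet": (91, 93),
    "Microsoft": (80, 80),
    "Meta": (70, 72),
}

combined_low = sum(low for low, _ in CAPEX_2025_BN.values())
combined_high = sum(high for _, high in CAPEX_2025_BN.values())
print(f"Combined 2025 capex: ${combined_low}-{combined_high}B")
```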

The Market Everyone Is Actually Targeting

The fundamental insight: OpenAI isn't selling software licenses at $20 per month. It's positioning to capture labor replacement at $100,000 per year in saved wages per knowledge worker.

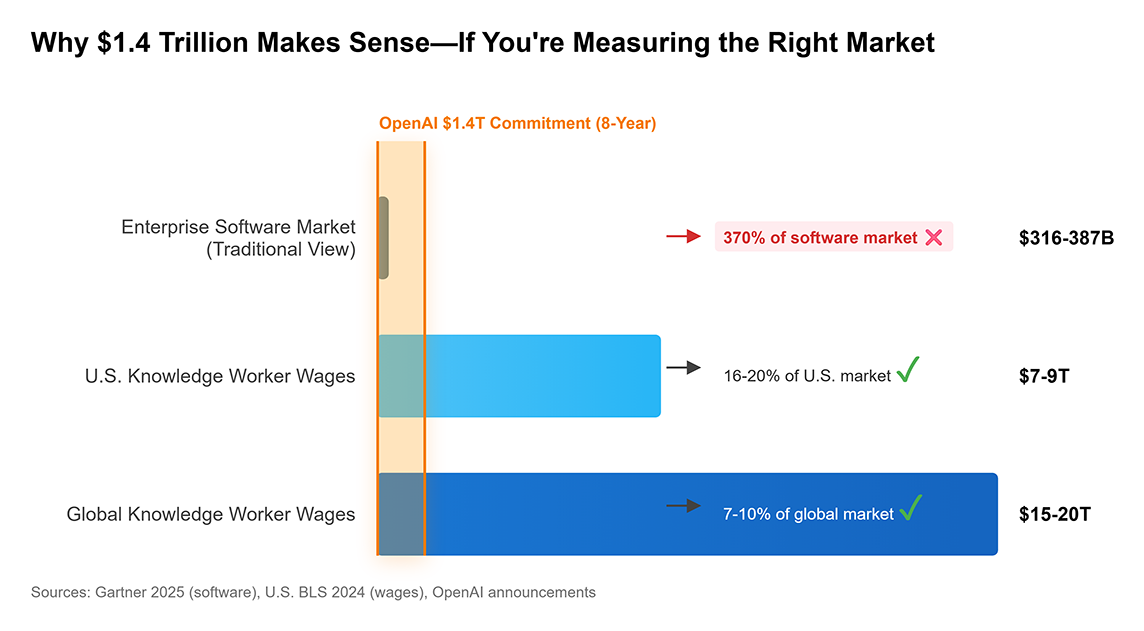

The actual addressable market isn't enterprise software budgets ($316-387 billion globally per Gartner/Statista 2025). It's the global wage bill for skilled labor. U.S. Bureau of Labor Statistics data shows total wages and salaries of approximately $11-12 trillion in 2024. Management, professional, and related occupations comprise roughly 42% of the workforce (71.5 million workers). If knowledge workers average $100,000+ in compensation, the U.S. knowledge worker wage market approaches $7-9 trillion. Globally, skilled labor compensation is conservatively estimated at $15-20 trillion.
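The U.S. range can be approximated as headcount times average compensation. In the sketch below, the 71.5 million headcount and the $11-12 trillion wage total come from the paragraph above. The average-compensation values above $100,000 are illustrative assumptions, not BLS figures.

```python
# Rough U.S. knowledge-worker wage pool: headcount x average compensation.
KNOWLEDGE_WORKERS = 71.5e6          # management, professional, and related occupations
TOTAL_US_WAGES = (11e12, 12e12)     # 2024 total wages and salaries range cited above

pools = {}
for avg_comp in (100_000, 110_000, 125_000):   # hypothetical average compensation
    pools[avg_comp] = KNOWLEDGE_WORKERS * avg_comp
    share_low = pools[avg_comp] / TOTAL_US_WAGES[1]
    share_high = pools[avg_comp] / TOTAL_US_WAGES[0]
    print(f"avg ${avg_comp:,}: pool ${pools[avg_comp] / 1e12:.2f}T "
          f"({share_low:.0%}-{share_high:.0%} of total U.S. wages)")
```

At a $100,000 average the pool is about $7.15 trillion. Reaching the top of the $7-9 trillion range requires an average near $125,000.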

This reframing is not speculative. Mechanize, a company founded in April 2025 by Tamay Besiroglu, co-founder of the AI research organization Epoch AI, explicitly calculated its total addressable market as "$60 trillion per year" by aggregating global worker wages in its public launch announcement. Its stated mission is "the full automation of all work." This is not software-as-a-service; it's wage-capture-as-a-service.

The unit economics distinction is critical:

Productivity Tool Model: A $30/month Microsoft Copilot subscription is sold to an existing employee who continues working. Annual revenue per user: $360.

Labor Replacement Model: An AI agent that replaces one $100,000 knowledge worker generates $100,000 in annual cost savings. If the vendor could capture that full saving, it would represent a 278-fold increase in economic capture per "customer" ($100,000 ÷ $360).
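The 278-fold figure is the replaced salary divided by the annual subscription price. The sketch below also varies a hypothetical vendor_share parameter, which is the fraction of the saving the vendor captures as price. This parameter is an assumption: the article's comparison implicitly sets it to 100%.

```python
# Per-"customer" revenue: seat subscription vs. a replaced salary.
COPILOT_MONTHLY_USD = 30
REPLACED_SALARY_USD = 100_000

annual_subscription = COPILOT_MONTHLY_USD * 12                       # per-seat revenue
full_capture_multiple = REPLACED_SALARY_USD / annual_subscription     # vendor keeps 100%

# Hypothetical: the vendor may price at only part of the employer's saving.
for vendor_share in (0.25, 0.5, 1.0):
    multiple = REPLACED_SALARY_USD * vendor_share / annual_subscription
    print(f"vendor captures {vendor_share:.0%} of savings -> {multiple:.0f}x")
```

Even at a 25% capture share, the multiple stays well above the seat-license model. This is the core of the reframing.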

This reframing transforms the $1.4 trillion investment from absurd to potentially rational:

Total Addressable Market: $15-20 trillion (global skilled labor, conservative)

Target Capture: 10% by 2035 = $1.5-2 trillion in annual wage displacement value

Gross Margins: 77% (Anthropic's 2028 projection)

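Annual Gross Profit: $1.5T × 70% = $1.05 trillion (low end of the capture range, at a margin slightly below Anthropic's 77% projection)

Annualized Infrastructure Cost: $1.4T ÷ 10 years = $140 billion (amortized over a 10-year life; over the eight-year commitment window, the figure is $175 billion)

Return on Invested Capital: $1.05T ÷ $140B = 7.5x (annual gross profit relative to annualized infrastructure cost, before operating expenses; equivalently, $10.5T of gross profit against $1.4T over the infrastructure lifetime)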

This ROIC calculation works mathematically—if the 10% labor market capture occurs.
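The calculation above can be parameterized to show how much the 7.5x depends on each input. The Python sketch below uses a hypothetical LaborTamScenario class. The base case reproduces the article's numbers. The other rows vary one input at a time: the 77% margin and the eight-year window come from the text, while the 5% capture row is a purely illustrative assumption.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LaborTamScenario:
    """Inputs for the labor-TAM coverage calculation (USD, annual unless noted)."""
    tam_usd: float                    # global skilled-labor wage pool
    capture_share: float              # fraction of the pool captured as revenue
    gross_margin: float               # gross margin on that revenue
    infra_usd: float = 1.4e12         # total infrastructure commitment
    amortization_years: int = 10      # years over which infra cost is spread

    def annual_gross_profit(self) -> float:
        return self.tam_usd * self.capture_share * self.gross_margin

    def annual_infra_cost(self) -> float:
        return self.infra_usd / self.amortization_years

    def coverage_multiple(self) -> float:
        """Annual gross profit divided by annualized infrastructure cost."""
        return self.annual_gross_profit() / self.annual_infra_cost()


base = LaborTamScenario(tam_usd=15e12, capture_share=0.10, gross_margin=0.70)

scenarios = {
    "article base case": base,
    "77% margin": replace(base, gross_margin=0.77),
    "8-year amortization": replace(base, amortization_years=8),
    "$20T TAM": replace(base, tam_usd=20e12),
    "5% capture (hyp.)": replace(base, capture_share=0.05),
}

for name, s in scenarios.items():
    print(f"{name:>20}: {s.coverage_multiple():.2f}x")
```

The multiple is a gross-profit figure that sits before R&D, sales, and financing costs, so it overstates a conventional return on invested capital. The eight-year row (6.00x) shows how sensitive the headline figure is to the amortization assumption.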

The Evidence Gap: Leading vs. Lagging Indicators

The critical weakness emerges when examining current data versus future projections. The $1.4 trillion bet assumes a discontinuous break from current trends. An analysis of the labor market shows the evidence is bifurcated—and the inflection may already be beginning for specific demographics.

On one hand, broad lagging indicators show stability. The Yale Budget Lab (October 2025) found that measures of AI exposure "show no sign of being related to changes in employment or unemployment" since ChatGPT's release. Enterprise AI adoption also remains low, ranging from 5-10% as of August 2025 according to the U.S. Census Bureau's Business Trends and Outlook Survey, depending on how "AI use" is defined.

On the other hand, high-frequency leading indicators show the inflection has already begun for a specific demographic: entry-level knowledge workers.

Stanford Digital Economy Lab (August 2025): In a report titled "Canaries in the Coal Mine?", researchers used high-frequency ADP payroll data to identify a "substantial decline" in employment for early-career workers (ages 22-25) in AI-exposed occupations. By July 2025, employment for this group in roles like software development had fallen 13% since late 2022 relative to less-exposed occupations, while employment for older workers in the same jobs continued to grow. In absolute terms, employment for workers aged 22-25 in the highest AI-exposure quintile declined 6% between late 2022 and July 2025. Over the same period, employment for workers aged 35-49 in those occupations grew by over 9%.

Federal Reserve Bank of St. Louis (August 2025): A report from the St. Louis Fed concluded that the U.S. "may be witnessing the early stages of AI-driven job displacement." Its analysis found that workers in AI-exposed fields like "math, computer, office and administrative functions" had "larger unemployment rate increases between 2022 and 2025."

The $1.4 trillion bet is not a blind fantasy. It is a massive wager that this leading-edge data will become the new macro trend, overpowering the lagging data by 2027-2030.

The Anthropic Test Case

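The most important near-term validation comes from Anthropic's disclosed projections. According to November 4 reporting by The Information, Anthropic targets enterprise customers with a disciplined focus on unit economics. It projects gross margins progressing from negative 94% to 50% to 77% by 2028, with positive cash flow by 2027.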

The 77% gross margin represents automation economics (minimal marginal cost), not SaaS economics (40-60% margins). This structure only makes sense if the "product" is digital labor replacing expensive human labor.

Anthropic's trajectory reveals a stark contrast within the sector. The company is on track for $9 billion in annual recurring revenue by the end of 2025, with targets of $20-26 billion ARR for 2026. API revenue alone is expected to reach $3.8 billion in 2025. Partners including Microsoft, Salesforce, Deloitte, and Cognizant are deploying its models to hundreds of thousands of employees, and Anthropic is targeting a $300-400 billion valuation in its next funding round.

Anthropic's 2027 cash flow milestone represents the single most important test of the entire labor replacement thesis. Suppose the most disciplined, enterprise-focused player cannot turn cash flow positive despite projecting 77% margins. That would strongly suggest that even optimal execution cannot escape the productivity-tool ceiling.

The Power Infrastructure Catalyst

On October 23, 2025, U.S. Energy Secretary Chris Wright invoked Section 403 of the Department of Energy Organization Act to direct FERC to standardize and accelerate interconnections for large loads (≥20 MW), explicitly targeting AI data centers. This would create the first federal framework for expedited data center grid connections, potentially cutting interconnection timelines from 42-66 months to 18-30 months. Wright requested that FERC take final action by April 30, 2026 in proceeding RM26-4-000. If FERC adopts the rule, it would remove a primary physical bottleneck. That creates asymmetric upside for the bull case by potentially pulling forward the labor replacement inflection by 18-24 months.
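The cited ranges imply a raw reduction in interconnection time. The Python sketch below computes it two ways: with the endpoints of the two ranges matched, and as the widest possible bounds when a project's position within each range is unknown. It covers only the timeline arithmetic. How much of that reduction carries through to the labor replacement inflection is the article's judgment, not something this calculation shows.

```python
# Interconnection timeline compression implied by the cited ranges (months).
CURRENT_MONTHS = (42, 66)    # current range: (fast, slow)
PROPOSED_MONTHS = (18, 30)   # targeted range: (fast, slow)

# Endpoint-matched savings: a fast project stays fast, a slow one stays slow.
savings_fast = CURRENT_MONTHS[0] - PROPOSED_MONTHS[0]
savings_slow = CURRENT_MONTHS[1] - PROPOSED_MONTHS[1]

# Widest bounds if a project's position within each range is unknown.
min_savings = CURRENT_MONTHS[0] - PROPOSED_MONTHS[1]
max_savings = CURRENT_MONTHS[1] - PROPOSED_MONTHS[0]

print(f"Endpoint-matched savings: {savings_fast}-{savings_slow} months")
print(f"Widest bounds: {min_savings}-{max_savings} months")
```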

The Public Exchange (November 5-6, 2025)

The controversy unfolded over two days:

November 5: At a Wall Street Journal conference, OpenAI CFO Sarah Friar discussed financing the $1.4 trillion commitment, explicitly mentioning "the backstop, the guarantee, that allows the financing to happen" and referencing "an ecosystem of banks, private equity, maybe even governmental" partners.

November 6 (morning): David Sacks, Trump's AI czar, posted: "There will be no federal bailout for AI. If one frontier AI company fails in the U.S., another will replace it."

November 6 (afternoon): Sam Altman posted: "We don't have government guarantees for OpenAI data centers and we don't want them. We believe that governments should not pick winners and losers and that taxpayers should not bail out companies that make bad business decisions."

The conventional interpretation: Friar made a "gaffe" using the politically toxic word "backstop," Altman walked it back, and everyone agrees no company-specific bailouts should occur—only sector-wide support like the CHIPS Act.

The Buried Contradiction (October 27, 2025)

This interpretation collapses when confronted with a document published nine days before Friar's comment. On October 27, OpenAI's Chief Global Affairs Officer Christopher Lehane published an 11-page policy letter to the White House Office of Science and Technology Policy. It explicitly called for CHIPS Act-style federal support for AI infrastructure, including grants, tax credits, and loan guarantees.

This isn't broad industrial policy commentary. It's a specific request for the precise mechanisms Altman publicly disavowed on November 6. The October 27 letter uses nearly identical language to Friar's November 5 remarks—including the concept of government support that "allows the financing to happen."

AI researcher Gary Marcus, who circulated the October 27 letter publicly, called Altman's November 6 denial "lying his ass off." He noted that the letter explicitly requested the very loan guarantees Altman claimed not to want.

The Implication

This sequence reveals a dual-track strategy:

Track 1 (Public/Wall Street): "We are a free-market commercial entity with strong unit economics. We don't want or need government guarantees."

Track 2 (Private/Washington): "We are a strategic national asset in a geopolitical AI race with China. We require CHIPS Act-style industrial policy, including loan guarantees and tax credits."

If the 7.5x ROIC from labor market capture were a high-confidence internal forecast, OpenAI would neither need nor request government support. Yet OpenAI is actively lobbying for "loan guarantees," "grants," and "tax credits" while publicly rejecting them. That reveals a fundamental lack of conviction in its own labor replacement thesis.

The Market's Dual Position

The most sophisticated capital reveals the same cognitive dissonance. On November 5, 2025, the Financial Times reported that Deutsche Bank's risk management division is exploring hedges against its multibillion-dollar data center loan exposure. The options include shorting AI-related stocks and purchasing default protection via synthetic risk transfers. Meanwhile, Deutsche Bank's investment banking division continues to profit from financing the AI boom.

The Bank of England is examining lending practices to data centers as part of a review of financial exposure to AI. Bank of America estimates $49 billion in outstanding data center asset-backed securities. Taken together, these moves signal institutional concern about AI-related exposure despite public bullishness.

Simultaneously, a September 2025 Deutsche Bank research note argued that without Big Tech AI-related capex, "the US would be close to, or in, recession this year."

These aren't contradictory positions. They form a perfectly rational two-level strategy:

Level 1 (Public/Sell-Side): Promote labor replacement narrative to fuel deal flow.

Level 2 (Private/Risk-Side): Execute systematic hedges against collapse to protect the balance sheet.

The smart money is simultaneously selling the boom and buying insurance against its failure. This is the clearest signal that even the architects and financiers of the boom are hedging against their own thesis.

The Ternary Outcome

Financial markets face resolution on AI infrastructure economics through three potential futures:

World A: Software TAM Ceiling — AI remains productivity enhancement tool. Enterprise adoption plateaus around 30-40%. Revenue opportunity caps at $500 billion globally. The $1.4 trillion vastly exceeds addressable market. Circular financing concerns prove valid. Anthropic fails to reach cash flow positive by 2027. Restructuring and consolidation sweep the sector.

World B: Labor TAM Transformation — AI agents successfully replace 10-20% of knowledge worker tasks by 2030-2035. Revenue capture reaches $1-2 trillion from the $15-20 trillion skilled labor market. The $1.4 trillion infrastructure investment proves justified by 7.5x ROIC. Anthropic reaches cash flow positive by 2027 and validates 77% margins by 2028. DOE-FERC interconnection acceleration removes power bottlenecks by 2026-2027.

World C: K-Shaped Productivity Divergence — A hybrid outcome supported by emerging 2025 data. AI does not cause mass displacement but becomes a powerful productivity multiplier. Benefits concentrate among the top 5-10% of workers, who see 3-5x productivity gains, while entry-level and mid-tier workers face employment pressure. Stanford data shows this pattern already emerging: senior developers thriving while junior developers see 13-20% employment declines. This creates a smaller market ($600-800 billion) that justifies moderate infrastructure investment but falls short of the $1.4T commitment. Income inequality accelerates, creating political pressure for digital safety net policies by 2027-2028. The World Economic Forum's Future of Jobs Report 2025 projects that by 2030, 170 million jobs will be created while 92 million are displaced—a net gain of 78 million jobs. However, this aggregate masks the distributional impact: job creation concentrates in high-skill augmented roles and low-skill physical roles, while mid-tier knowledge work faces displacement.

The Five Critical Observables (2025-2027)

Resolution arrives through five near-term indicators that test fundamentally different dimensions of the thesis:

1. Frontier AI Lab Path to Profitability (2026-2027) — Tests whether any frontier AI lab can demonstrate sustainable unit economics. Watch Microsoft's quarterly equity method losses (OpenAI proxy) and Anthropic's 2027 cash flow milestone with margin progression (negative 94% → 50% → 77%). World A: losses accelerate, profitability targets missed. World B: burn stabilizes, Anthropic achieves cash flow positive validating the model works. World C: Anthropic reaches profitability but at lower margins and revenue than projected.

2. Enterprise Adoption & Commitment (2026) — Tests whether enterprises see incremental tool or transformative labor substitute. Watch CFO budget reclassification from IT/software to labor/digital workforce categories, pilot-to-production conversion rates, and Census Bureau adoption trajectory. World A: AI stays in capped IT budgets, pilot-to-production stalls at 20-30%, adoption plateaus <15%. World B: material budget shifts to labor categories, conversion exceeds 60%, adoption surpasses 25%. World C: adoption grows to 20-30% but remains classified as productivity tool, not labor replacement.

3. AI Pricing Power & Commoditization Pressure (2026) — Tests whether proprietary models can defend moats and pricing against open-source alternatives. Watch OpenAI/Anthropic API pricing trajectory versus open-source capability gaps and China's cost advantages. World A: forced price cuts, open-source catches up within 12 months, commoditization accelerates. World B: pricing power maintained, proprietary models sustain 12-18 month lead, customers pay premium. World C: pricing holds for high-end use cases, compresses for routine tasks.

4. Hyperscaler Capex Sustainability (2026) — Tests whether ecosystem funding continues regardless of near-term profitability. Watch Microsoft, Amazon, Google, Meta 2026 guidance and free cash flow versus capex commitments. World A: capex cuts, FCF pressure forces retrenchment, "discipline" rhetoric increases. World B: sustained or increased AI capex with strong FCF generation. World C: capex grows but at decelerating rate, focus shifts to ROI metrics.

5. U.S. Labor Market Data for AI-Exposed Occupations (2026) — Most direct real-world test of displacement thesis. Watch BLS unemployment and wage data for customer service, legal support, data entry, junior analyst roles. World A: no structural employment changes, wages grow normally, productivity gains not displacement. World B: rising unemployment in AI-exposed roles, wage compression, declining job postings. World C: employment pressure concentrates at entry-level (ages 22-25), senior roles grow, creating experience premium.
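Observable 2 lends itself to a simple decision rule. The Python sketch below maps enterprise-adoption readings onto Worlds A, B, and C using the thresholds stated above. The function name, argument names, and the order of the checks are illustrative assumptions. The rule covers only this one observable, not all five.

```python
from enum import Enum


class World(Enum):
    A = "Software TAM ceiling"
    B = "Labor TAM transformation"
    C = "K-shaped productivity divergence"
    UNCLEAR = "No clear signal yet"


def classify_enterprise_signal(
    adoption_pct: float,            # Census Bureau AI adoption rate, percent
    pilot_conversion_pct: float,    # pilot-to-production conversion rate, percent
    budgeted_as_labor: bool,        # True if spend is reclassified to labor categories
) -> World:
    """Hypothetical mapping of Observable 2 readings onto the three worlds."""
    # World B is checked first because it requires all three thresholds at once.
    if budgeted_as_labor and pilot_conversion_pct > 60 and adoption_pct > 25:
        return World.B
    # World A: adoption plateaus below 15% and conversion stalls at or below 30%.
    if adoption_pct < 15 and pilot_conversion_pct <= 30:
        return World.A
    # World C: adoption reaches 20-30% but AI stays budgeted as a productivity tool.
    if 20 <= adoption_pct <= 30 and not budgeted_as_labor:
        return World.C
    return World.UNCLEAR


print(classify_enterprise_signal(10, 25, False))
print(classify_enterprise_signal(30, 65, True))
print(classify_enterprise_signal(25, 40, False))
print(classify_enterprise_signal(18, 40, False))
```

Some readings fall between the stated bands, such as 18% adoption with 40% conversion. These return UNCLEAR, because the thresholds above leave gaps between the worlds.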

Investment Implications

World A Positioning: Short neo-clouds and AI-pure-play infrastructure with concentrated customer bases and high debt (CoreWeave, Lambda Labs, Applied Digital). Long hyperscalers with strong non-AI businesses (Microsoft Office/Azure, Google Search/Ads, Amazon retail/AWS). Long diversified data center REITs as relative value play. Avoid pure-play AI infrastructure without revenue diversification.

World B Positioning: Long hyperscaler AI infrastructure. Long power generation and data center real estate in favorable regulatory jurisdictions. Long semiconductor equipment and advanced packaging. Long companies with large proprietary datasets (financial services, healthcare, legal). Long neo-clouds if they survive to capture labor replacement economics.

World C Positioning: Long companies benefiting from productivity tools (Microsoft, Salesforce). Long upskilling/reskilling platforms. Short entry-level focused recruitment firms. Consider inequality-hedge assets. Focus on companies with strong senior talent retention.

Hedged Approach: Wait and see until the 2027 Anthropic test plays out and the 2026 observable data accumulate. Consider pairs trades: long hyperscalers with diversified revenue, short pure-play AI infrastructure. Recognize asymmetric outcomes. World A creates concentration risk in AI-dependent plays. World B creates massive opportunity with uncertain timing. World C creates winners and losers within the same sectors.

The Strategic Competition Override

Viewing AI infrastructure as strategic competition with China rather than commercial software investment changes the framework entirely. The United States sustained large-scale defense spending throughout the Cold War. If AI represents similar strategic competition, as the Trump administration's actions suggest, then commercial profitability timelines become secondary to maintaining technological advantage.

The OpenAI October 27 letter requesting "loan guarantees" isn't evidence of failed economics; it's deliberate strategic positioning. The company argues it should be treated as critical national infrastructure (like TSMC under the CHIPS Act), not as a commercial software vendor subject to pure market discipline.

The contradiction between the October 27 letter and the November 6 statements reflects tension between these framings. OpenAI wants to be treated as strategic infrastructure (eligible for CHIPS-style support) while maintaining a commercial unicorn valuation (no bailouts needed). This duality creates the documented contradictions. It also reveals that even the architects privately hedge against their own thesis, through government support requests (OpenAI) and derivative protection (Deutsche Bank).

In October 2025, the Bank of England's Financial Policy Committee warned that equity valuations look stretched, particularly for AI-focused technology companies, and that markets are vulnerable to a sharp correction if sentiment fades. The smart money's hedging, reported at Deutsche Bank and mirrored in regulators' scrutiny of data center lending, suggests that the labor replacement thesis has not yet earned full conviction even among those financing its pursuit.

OpenAI's November 6 disclosure of $1.4 trillion in infrastructure commitments against a $20 billion annualized run rate creates an unprecedented 70-to-1 ratio of commitments to revenue. Microsoft's October 29 equity method disclosure provides rare verifiable data on actual losses: $9.5 billion quarterly, implied from the 32.5% ownership stake, with Microsoft's recorded share up 492.7% year over year. The Friar-Sacks-Altman exchange (November 5-6), combined with the October 27 policy letter, reveals systematic hedging through a dual-track strategy. AI researcher Gary Marcus highlighted this contradiction publicly.

Reframing the opportunity as a labor TAM ($15-20 trillion in skilled wages) rather than a software TAM ($316-387 billion) resolves the apparent circular financing through the 7.5x ROIC calculation, but only if 10% labor displacement occurs. Current observable data suggest that the World C K-shaped outcome may be the most likely near-term path. Enterprise adoption stands at 5-10%, and employment effects are bifurcated: Stanford shows a 13% relative decline for ages 22-25 but growth for senior workers. Two developments provide clear tests: Anthropic's 2027 cash flow target (with margins progressing from negative 94% to 50% to 77%), and DOE-FERC interconnection acceleration (the October 23, 2025 Section 403 directive, docket RM26-4-000).

Rated 9/10 rather than 10/10 because ultimate resolution depends on achieving large-scale labor displacement on a 2027-2030 timeline. Displacement currently shows early signs in entry-level roles but is not yet validated at economy-wide scale. The World C scenario (productivity divergence without mass displacement) represents a plausible middle path that neither fully validates nor invalidates the thesis, creating continued uncertainty through 2026-2027.

This commentary is provided for informational purposes only and does not constitute investment advice, an offer to sell, or a solicitation to buy any security. The information presented represents the opinions of The Stanley Laman Group as of the date of publication and is subject to change without notice.

The securities, strategies, and investment themes discussed may not be suitable for all investors. Investors should conduct their own research and due diligence and should seek the advice of a qualified investment advisor before making any investment decisions. The Stanley Laman Group and its affiliates may hold positions in securities mentioned in this commentary.

Past performance is not indicative of future results. All investments involve risk, including the potential loss of principal. Forward-looking statements, projections, and hypothetical scenarios are inherently uncertain and actual results may differ materially from expectations.

The information contained herein is believed to be accurate but is not guaranteed. Sources are cited where appropriate, but The Stanley Laman Group makes no representation as to the accuracy or completeness of third-party information.

This material may not be reproduced or distributed without the express written consent of The Stanley Laman Group.

© 2025 The Stanley-Laman Group, Ltd. All rights reserved.