The most significant development in quantum computing's Fall 2025 breakthrough period wasn't IBM's 1,121-qubit processor or Google's error correction milestone—achievements that dominated headlines and analyst reports. The underreported signal: neutral atom quantum computing emerged from relative obscurity to demonstrate superior scaling characteristics, achieve record-breaking error-corrected logical qubits, and attract strategic capital from institutions hedging across all competing hardware modalities.

Three well-funded companies (Atom Computing, QuEra, and Pasqal) achieved what superconducting systems fundamentally struggle with: physical qubit density approaching individual atoms separated by as little as 4 microns, all-to-all connectivity allowing any qubit to interact with any other without expensive intermediary operations, and a clear path to scaling beyond 1,000 qubits without the wiring complexity that constrains competing approaches.

The physics advantages translate directly into engineering scalability. Individual atoms are identical by nature—unlike manufactured superconducting circuits with inherent fabrication variations that compound error rates. Atoms are positioned and controlled optically using "optical tweezers" (focused laser beams) rather than requiring individual wiring for each qubit, eliminating the physical wiring bottleneck that becomes prohibitive as superconducting systems scale from hundreds to thousands of qubits. Room-temperature optical components (versus dilution refrigerators at 15 millikelvin) reduce infrastructure complexity and operational costs by orders of magnitude.

Atom Computing's November 2024 partnership with Microsoft yielded the largest number of entangled logical qubits on record: 24 entangled logical qubits, with error correction capabilities demonstrated on 28. The company's second-generation systems scale up to 1,180 physical qubits using ytterbium-171 atoms and achieve 99.6% two-qubit gate fidelity, the highest for neutral atoms in commercial systems, with coherence times exceeding 40 seconds. For context, superconducting qubits maintain coherence for microseconds to milliseconds. Gate operation speeds lag superconducting qubits by 100-1000x, but industry experts increasingly view this as irrelevant: as quantum computing transitions from noisy intermediate-scale quantum (NISQ) systems to fault-tolerant architectures, absolute gate speed matters far less than overall error-corrected operation fidelity.

The Microsoft-Atom partnership roadmap, which targets scaled systems with higher logical qubit counts for commercial delivery through 2025-2026, represents the most aggressive near-term fault-tolerant deployment in the industry and builds on the demonstrated 24-28 logical qubit foundation. This isn't academic research; it's commercial deployment with paying customers and service-level commitments through Azure Quantum.

QuEra's February 2025 $230 million raise from Google Ventures, SoftBank, and Valor Equity Partners, with NVIDIA's NVentures joining as an expansion investor in September, reflects institutional conviction in neutral atoms' commercial trajectory rather than speculative venture capital chasing hype. The company's 256-qubit Aquila system is publicly accessible via Amazon Web Services, and its on-premise Gemini systems have been delivered to Japan's National Institute of Advanced Industrial Science and Technology (AIST) and the UK's National Quantum Computing Centre. Geographic reach beyond U.S. markets, particularly into Asian government research institutions, signals export viability and international competitive positioning.

Pasqal's June roadmap targeting 10,000 physical qubits and 200 logical qubits by 2030, combined with its November equity investment from LG Electronics, indicates Asian manufacturing giants are hedging beyond superconducting approaches dominated by Western players. LG's strategic investment suggests South Korean industrial policy views neutral atoms as a viable path to quantum computing leadership independent of IBM-Google technology stacks.

The November 2025 DARPA Quantum Benchmarking Initiative Stage B selections reveal U.S. government hedging strategy: two neutral atom companies (Atom Computing, QuEra) advanced alongside two trapped ion, two superconducting, four silicon spin, and one photonic company. DARPA's diversification—allocating up to $15 million per company for Stage B R&D baseline plans and potential $300 million per company for Stage C utility-scale construction by 2033—reflects genuine uncertainty about winning modalities. The neutral atom contingent's strength, particularly given the approach's relative obscurity until 2023, signals material competitive repositioning that public markets have not yet priced.

All-to-all connectivity represents neutral atoms' most profound architectural advantage over superconducting qubits. In superconducting systems, qubits can only directly interact with immediate neighbors in the physical layout. Connecting distant qubits requires expensive SWAP gate operations that introduce errors and consume computational resources.

Neutral atom systems enable any atom to interact with any other atom through carefully tuned laser pulses, dramatically improving logical qubit encoding efficiency. This architectural difference compounds as systems scale: a 1,000-qubit neutral atom array maintains full connectivity, while a 1,000-qubit superconducting system faces steadily growing SWAP overhead to route operations across the chip, because the average distance between qubits grows with the size of the layout.
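To make the routing cost concrete, here is a minimal sketch under a deliberately simplified model: qubits sit on a square nearest-neighbor grid, and bringing two qubits adjacent costs one SWAP per grid step beyond the first. The function names and the cost model are illustrative assumptions, not a description of any vendor's compiler or chip layout.

```python
from itertools import product

def grid_swap_count(a, b):
    """SWAPs needed to make grid qubits a and b adjacent (toy model)."""
    distance = abs(a[0] - b[0]) + abs(a[1] - b[1])  # Manhattan distance
    return max(distance - 1, 0)

def mean_swaps(side):
    """Average SWAP count over all distinct qubit pairs on a side x side grid."""
    sites = list(product(range(side), repeat=2))
    total = 0
    pairs = 0
    for i, a in enumerate(sites):
        for b in sites[i + 1:]:
            total += grid_swap_count(a, b)
            pairs += 1
    return total / pairs

for side in (4, 8, 16):
    # With all-to-all connectivity the corresponding SWAP count is zero.
    print(f"{side * side:4d} qubits: {mean_swaps(side):.2f} SWAPs per two-qubit gate on average")
```

In this toy model the average SWAP count rises with the side length of the grid, so every additional SWAP adds both time and another opportunity for error as the chip grows.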

Atom Computing's demonstrated 28 logical qubits from approximately 1,180 physical qubits represent a roughly 42:1 physical-to-logical ratio. That is about 2.4x to 24x better than surface code projections (typically 100:1 to 1,000:1) and sits within IBM's quantum Low-Density Parity-Check (qLDPC) target range of 10:1 to 100:1. This efficiency advantage becomes decisive at scale: achieving 100 logical qubits might require about 4,200 physical qubits for neutral atoms versus 10,000-100,000 for surface code implementations, dramatically reducing hardware complexity and cost.
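The overhead arithmetic can be checked with a short sketch. It uses only the figures quoted in this section; the helper name `physical_needed` is hypothetical, and the ratios are treated as simple linear multipliers, which is an assumption rather than a property of any real error correction code.

```python
import math

ATOM_PHYSICAL = 1180      # physical qubits quoted for Atom Computing
ATOM_LOGICAL = 28         # logical qubits demonstrated
SURFACE_RANGE = (100, 1000)  # quoted surface code ratios
QLDPC_RANGE = (10, 100)      # quoted IBM qLDPC target ratios

def physical_needed(logical_qubits, ratio):
    """Physical qubits required at a given physical-to-logical ratio."""
    return math.ceil(logical_qubits * ratio)

atom_ratio = ATOM_PHYSICAL / ATOM_LOGICAL
print(f"Neutral atom ratio: {atom_ratio:.1f}:1")

# How much better than each end of the surface code range?
for surface in SURFACE_RANGE:
    print(f"vs {surface}:1 surface code: {surface / atom_ratio:.1f}x fewer physical qubits")

# Physical qubits for 100 logical qubits, using the rounded 42:1 figure.
target = 100
print("neutral atoms:", physical_needed(target, round(atom_ratio)))
print("surface code: ", [physical_needed(target, r) for r in SURFACE_RANGE])
print("qLDPC target: ", [physical_needed(target, r) for r in QLDPC_RANGE])
```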

The technology's emergence from academic research to commercial deployment occurred with remarkable velocity. The first neutral atom qubit demonstration dates to 2015-2016 academic papers. By 2021, startups began forming with serious venture backing. The 2024-2025 period delivered commercial systems, government contracts, and strategic partnerships with Microsoft, Amazon, and NVIDIA—a 3-4 year commercialization timeline suggesting the underlying physics matured faster than consensus anticipated.

Why did Wall Street miss this development? Media coverage concentrated on IBM and Google, whose decades of quantum investment dominate narratives. Neutral atom companies remained private longer than SPAC-listed competitors (IonQ, Rigetti, D-Wave went public 2021-2022), limiting analyst coverage. The technology's academic origins—university physics labs rather than corporate research divisions—meant less venture capital visibility until recent fundraising rounds.

The investment signal strengthened through Fall 2025 as neutral atom companies demonstrated commercial traction beyond pure research. QuEra's AWS integration provides cloud-accessible quantum computing at standard cloud rates—democratizing access beyond on-premise systems. Atom Computing's Microsoft partnership delivers quantum-as-a-service through Azure's enterprise customer base. Pasqal's hardware sales to government research institutions create reference customers critical for scaling.

NVIDIA's strategic investment in the neutral atom sector, through QuEra, represents the most significant validation. NVIDIA profits regardless of which quantum modality wins by selling GPUs for hybrid quantum-classical workflows, error correction simulation, and algorithm development. The company's willingness to back neutral atoms alongside trapped ion (Quantinuum) and photonic (PsiQuantum) approaches signals confidence that multiple technologies will coexist in specialized applications rather than winner-take-all outcomes.

The competitive threat to IBM and Google's superconducting dominance is material but not immediate. Superconducting qubits maintain advantages in gate speed (nanoseconds versus microseconds) and decades of accumulated intellectual property, fabrication expertise, and ecosystem development. IBM's roadmap targeting 200 logical qubits by 2029 and Google's error correction breakthroughs demonstrate superconducting systems remain viable paths to fault-tolerant quantum computing. However, neutral atoms' superior scaling physics—particularly the wiring bottleneck elimination—positions the technology to potentially leapfrog superconducting approaches in the 2027-2030 period when systems attempt to scale from hundreds to thousands of logical qubits.

The broader strategic implication: quantum computing's future likely involves multiple coexisting hardware modalities, each optimized for specific application domains. Superconducting qubits may excel in environments requiring maximum gate speed despite operational complexity. Neutral atoms could dominate applications where scalability and operational simplicity matter more than raw speed. Trapped ions (IonQ, Quantinuum) offer highest fidelity for specialized calculations. Photonic systems (PsiQuantum) target applications requiring room-temperature operation and quantum networking.

For investors, the neutral atom breakthrough represents an asymmetric opportunity on a compressed timeline. The technology moved from academic curiosity to commercial deployment in under five years, faster than superconducting qubits' decades-long development cycle.

Current private market valuations remain below those of better-known competitors despite comparable or superior technical achievements. QuEra's $230 million raise (estimated valuation $400-600 million) and Atom Computing's Microsoft partnership (estimated valuation $500 million-$1 billion) occurred at valuations substantially below trapped-ion leader Quantinuum's $10 billion or photonic developer PsiQuantum's $7 billion, creating potential entry points before public market discovery drives valuation expansion.

The signal's strength derives from convergence of technical validation, commercial traction, government support, and strategic capital deployment—not isolated laboratory achievements or speculative roadmaps. Neutral atom quantum computing solved fundamental physics challenges (atom trapping, coherence, gate fidelity) while demonstrating a clear engineering path to industrial-scale manufacturing. This combination—physics validation plus scalable engineering—defines genuine technological inflection points that create investable opportunities before consensus recognition drives valuations to efficiency.

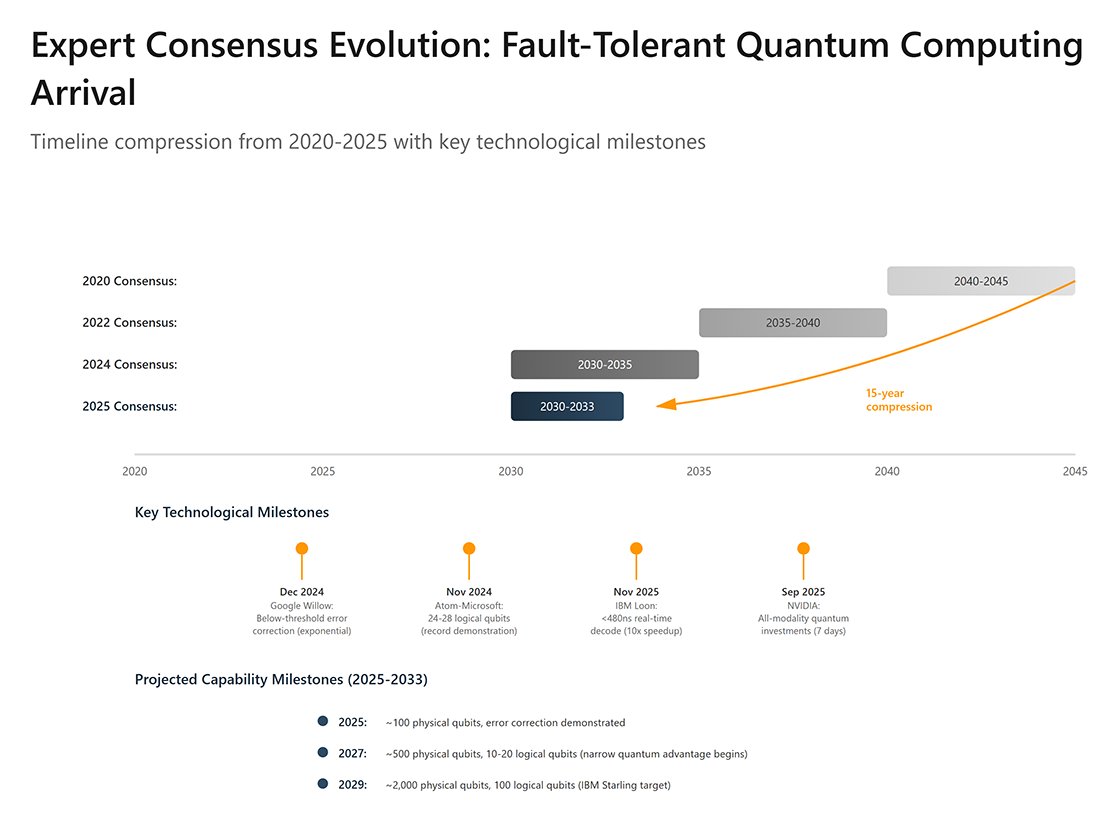

The technical foundation enabling neutral atom quantum computing's commercial viability—and validating the compressed 2030-2033 timeline—is the achievement of below-threshold quantum error correction across multiple independent research groups and hardware modalities. This represents the transition from theoretical possibility to empirical reality, solving the fundamental challenge that has constrained quantum computing since the field's inception.

Quantum error correction (QEC) involves encoding a single "logical qubit" across multiple entangled physical qubits. By redundantly storing information and constantly monitoring for errors, the system detects and corrects faults without collapsing the quantum state—a requirement that sounds paradoxical (how do you measure without destroying the quantum information?) but is achievable through clever encoding schemes.
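The apparent paradox is easier to see in a classical analogue. The sketch below simulates a three-bit repetition code that protects against bit flips only: the "syndrome" consists of two parity checks that reveal where a flip occurred without revealing the encoded value. This is an illustrative toy with hypothetical function names, not the surface code or qLDPC schemes discussed later, and it ignores phase errors entirely.

```python
import random

# Syndrome (parity of bits 0-1, parity of bits 1-2) -> index of the bit to flip.
SYNDROME_TO_FLIP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def encode(bit):
    """Store one logical bit redundantly in three physical bits."""
    return [bit, bit, bit]

def apply_noise(codeword, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ int(rng.random() < p) for b in codeword]

def measure_syndrome(codeword):
    """Parity checks: identical for 000 and 111, so the logical value stays hidden."""
    return (codeword[0] ^ codeword[1], codeword[1] ^ codeword[2])

def correct(codeword):
    """Apply the single-bit fix implied by the syndrome."""
    flip = SYNDROME_TO_FLIP[measure_syndrome(codeword)]
    fixed = list(codeword)
    if flip is not None:
        fixed[flip] ^= 1
    return fixed

def logical_error_rate(p, trials=100_000, seed=0):
    """Fraction of trials in which correction fails (two or more flips)."""
    rng = random.Random(seed)
    failures = sum(correct(apply_noise(encode(0), p, rng)) != encode(0)
                   for _ in range(trials))
    return failures / trials

for p in (0.01, 0.05, 0.2):
    print(f"physical error {p:.2f} -> logical error {logical_error_rate(p):.4f}")
```

A single flip is always repaired, while two simultaneous flips are miscorrected, which is why the encoded error rate falls below the physical rate only when the physical rate is small enough.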

The theory has existed since the 1990s. The implementation proved immensely difficult because QEC only provides net benefit if the physical error rate falls below a certain threshold. Otherwise, the process of correction introduces more errors than it fixes. For three decades, experimental systems operated above this threshold—adding more qubits made systems noisier and less reliable, not more capable.

Google's December 2024 Willow chip demonstration marked the watershed: For the first time in quantum computing history, a system achieved exponential error suppression as it scaled. Using surface codes (the leading QEC architecture), Google created logical qubits from lattices of physical qubits of increasing size—3×3, 5×5, and 7×7 arrays. As the team increased lattice size, the logical error rate decreased exponentially rather than increased, with a suppression factor of 2.14× at each step. The logical qubit in the 7×7 array lived 2.4× longer than the best physical qubit in the system.

This validates that the system operates "below threshold"—the critical point where error correction helps more than its own complexity hurts. Google's demonstration provides the first empirical validation that the physical theory of fault-tolerant quantum computing is achievable, not just mathematically elegant.
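A short sketch shows what a fixed suppression factor implies. It assumes the standard scaling model in which the logical error rate shrinks by a constant factor (here the 2.14 reported above) each time the code distance grows by two, and it assumes a rotated surface code layout with d² data qubits plus d² − 1 measurement qubits. Distances beyond 7 are hypothetical extrapolations, not reported results.

```python
LAMBDA = 2.14  # suppression factor per distance step of 2, as quoted above

def relative_logical_error(distance, base_distance=3, lam=LAMBDA):
    """Logical error at `distance` relative to `base_distance`,
    assuming error scales as lam ** (-(d + 1) / 2)."""
    steps = (distance - base_distance) / 2
    return lam ** (-steps)

def surface_code_qubits(distance):
    """Physical qubits per logical qubit (assumed rotated surface code layout)."""
    return 2 * distance * distance - 1

for d in (3, 5, 7, 9, 11):
    print(f"d={d:2d}: {surface_code_qubits(d):3d} physical qubits, "
          f"logical error x{relative_logical_error(d):.3f} relative to d=3")

# Above threshold the factor is below 1 and larger codes make things worse.
print("above threshold, d=7:", round(relative_logical_error(7, lam=0.9), 3))
```

The last line illustrates the threshold itself: with a factor below 1, the same scaling law makes larger codes noisier, which is the regime experiments were stuck in before 2024.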

IBM's November 2025 announcement accelerated the timeline beyond Google's proof-of-concept: The company's Loon processor achieved real-time error correction in under 480 nanoseconds using AMD FPGAs—a 10× speedup over previous approaches and delivered one year ahead of IBM's public roadmap. This matters because real-time decoding—the classical computation required to interpret error measurements and determine necessary corrections—must complete faster than the qubits decohere. IBM's speedup demonstrates that the "decoding bottleneck" can be solved with existing hardware rather than requiring exotic new technologies.

The company's quantum Low-Density Parity-Check (qLDPC) codes reduce error correction overhead by approximately 90% versus surface codes that dominated the field for two decades. Overhead—the ratio of physical qubits required per logical qubit—represents the critical economic metric determining when quantum computers become cost-competitive with classical systems for specific applications. Surface codes typically require 100:1 to 1,000:1 physical-to-logical ratios. IBM's qLDPC codes target 10:1 to 100:1 ratios, potentially reducing the physical qubit requirements for a useful quantum computer by an order of magnitude.

Harvard-MIT researchers using QuEra's neutral atom system demonstrated the first architecture combining all essential elements for scalable error correction with errors suppressed below the critical threshold. The team demonstrated dozens of error correction layers and achieved 3,000+ qubits running continuously for over two hours—previous best performance was 13 seconds from a Caltech 6,100-qubit array. The Harvard system uses an optical lattice "conveyor belt" to inject 300,000 atoms per second, overcoming the "atom loss" problem that forced previous experiments to pause, reload, and restart. In theory, the system could run indefinitely.

These three independent validations—Google's superconducting qubits, IBM's optimized error correction codes, and Harvard/QuEra's neutral atom continuous operation—collectively prove that below-threshold quantum error correction is achievable across multiple hardware modalities. The convergence of independent approaches crossing the threshold within months of each other signals that the field reached a fundamental inflection point rather than isolated laboratory achievements.

The implications for commercial timelines are direct: The path from current ~10-50 logical qubits to the ~100 logical qubits needed for meaningful quantum advantage is now an engineering challenge rather than a physics problem. IBM's roadmap targeting 200 logical qubits by 2029 (Starling system) and over 1,000 logical qubits in subsequent systems (Blue Jay) represents credible engineering extrapolation rather than speculative science fiction.

Breaking RSA-2048 encryption, the benchmark for cryptographically-relevant quantum computers (CRQC), requires approximately 4,000 logical qubits with high-fidelity operations sustained for hours. At current roadmap trajectories, this capability arrives 2033-2038 for leading companies. However, Craig Gidney's May 2025 algorithmic improvements reduced the physical qubit count needed by roughly 20×: from 20 million physical qubits running for 8 hours to under 1 million noisy qubits completing the task in 5 days, trading a longer runtime for far less hardware. At DEF CON 33 in August 2025, cybersecurity researcher Konstantinos Karagiannis presented evidence that "the vague prediction of 10-20 years has already collapsed," asserting a "high possibility ciphers such as RSA and ECC will be broken around 2030."
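The trade-off in those two resource estimates is worth spelling out. The sketch below uses only the figures quoted above, taking "under 1 million" as exactly 1 million for an upper bound; the dictionary layout and the qubit-hours comparison are illustrative choices, not metrics from the underlying research.

```python
earlier = {"physical_qubits": 20_000_000, "hours": 8}
revised = {"physical_qubits": 1_000_000, "hours": 5 * 24}  # upper bound on qubits

qubit_reduction = earlier["physical_qubits"] / revised["physical_qubits"]
runtime_increase = revised["hours"] / earlier["hours"]

def qubit_hours(estimate):
    """Crude total resource measure: hardware size times runtime."""
    return estimate["physical_qubits"] * estimate["hours"]

print(f"Hardware reduction: {qubit_reduction:.0f}x fewer physical qubits")
print(f"Runtime increase:   {runtime_increase:.0f}x longer")
print(f"Qubit-hours: {qubit_hours(earlier):,} -> {qubit_hours(revised):,}")
```

The improvement is mostly a reduction in how large a machine must be built rather than in total work done, which is why it pulls the threat timeline forward: machine size, not patience, is the binding constraint.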

This timeline compression—from "maybe 2040" to "likely 2030-2033"—represents the most underreported national security development of 2025. Current encrypted data faces "harvest now, decrypt later" (HNDL) attacks where adversaries collect encrypted communications today for decryption once quantum computers become available. Data requiring protection beyond 2030—classified government communications, long-term trade secrets, financial records, healthcare information—must transition to post-quantum cryptography before adversaries finish collection efforts already underway.

The U.S. National Security Memorandum 10 mandates full federal migration to post-quantum cryptography by 2035, while the NSA requires National Security Systems to adopt quantum-resistant algorithms between 2030 and 2033. President Trump's June 2025 Executive Order rolled back mandates the Biden Administration had issued in January, eliminating hard deadlines and extending timelines. The result is breathing room that experts warn risks postponing critical upgrades precisely when urgency is greatest.

For pharmaceutical and materials science applications, the timeline extends further. Simulating complex molecules of interest to drug discovery and catalysis, such as cytochrome P450 or FeMoco (the iron-molybdenum cofactor of nitrogenase, the enzyme enabling nitrogen fixation), would require 100,000 to 2.7 million qubits. Current systems offer hundreds to low thousands of physical qubits (256 on QuEra's Aquila, about 1,180 on Atom Computing's second-generation systems, 1,121 on IBM's Condor), suggesting pharmaceutical applications requiring accurate molecular simulation won't achieve quantum advantage until the mid-2030s at the earliest.

However, hybrid quantum-classical workflows demonstrate incremental value sooner. IonQ and Ansys's March 2025 demonstration achieved 12% speedup for medical device blood flow simulation—genuine but modest advantage unlikely to justify quantum computing costs for most firms. HSBC's September 2025 deployment using IBM's Heron processor reported 34% improvement in bond trading prediction accuracy, though quantum theorist Scott Aaronson noted performance diminished during blinding windows, suggesting potential data leakage rather than genuine advantage.

The honest industry assessment comes from Quantinuum Head of Strategy Chad Edwards: "We are currently using chemistry problems to advance quantum computing instead of using quantum computing to advance chemistry. But there will be a tipping point." This captures the current state precisely: quantum computing advances through pharmaceutical and materials science benchmarking, but hasn't crossed into utility for actual commercial applications requiring accuracy and scale beyond laboratory demonstrations.

The critical distinction: quantum computers achieving narrow advantage on specific tasks (optimization, simulation of small molecules, certain machine learning subroutines) will arrive 2027-2030 as systems reach ~100 logical qubits. Transformative applications delivering economic value exceeding development costs require 1,000-10,000 logical qubits and likely won't materialize until the early-to-mid 2030s. The gap between "narrow advantage" and "transformative impact" represents years of engineering scale-up, algorithm optimization, and commercial workflow integration—work that occurs after the physics problems are solved but before the technology reshapes industries.

These breakthroughs in error correction and neutral atom scalability deserve recognition—but market enthusiasm has raced ahead of technical reality in predictable ways. Three narratives require investor skepticism to separate genuine progress from marketing theater.

The most egregious example of marketing overtaking science in quantum computing's 2025 developments: Microsoft's February announcement claiming its Majorana 1 chip was the "world's first QPU powered by topological qubits" with 8 qubits offering inherent error protection and a path to millions of qubits on a single chip. The claim unraveled within weeks under scientific scrutiny, revealing the gap between corporate communications and peer-reviewed physics.

Nature's editorial clarification directly contradicted Microsoft's press narrative: the results "do not represent evidence for topological modes, but the research offers a platform for manipulating such modes in the future." Professor George Booth of King's College London noted Microsoft has "pioneered the idea... but so far have failed to demonstrate working devices while competitors have been building basic quantum computers... managed to build roughly one half of one qubit."

The scientific community's skepticism intensified at the March 2025 APS Global Summit, where Microsoft physicist Chetan Nayak's presentation left "many physicists expressing doubts about the claim" according to contemporaneous reporting. The doubts weren't merely academic quibbling; they reflected fundamental questions about whether Microsoft had demonstrated topological qubits at all, as opposed to conventional semiconductor structures exhibiting superficially similar behavior.

Microsoft's history compounds credibility concerns. A 2018 Nature paper from a Microsoft-backed team reporting Majorana signatures was retracted in 2021 after scientific flaws were identified, setting back the research direction by years and damaging the field's reputation. Separate allegations concerned potential data manipulation in 2017 Nature Communications publications, further undermining trust in Microsoft's quantum claims. This pattern of bold announcements followed by scientific pushback and retractions distinguishes Microsoft from competitors whose claims generally withstand peer review.

Microsoft's hedge—partnering with Atom Computing for near-term deliverables while pursuing long-shot topological approaches—reveals the company's own uncertainty about its technology pathway. The integrated system targeting scaled logical qubit counts for 2025-2026 commercial delivery relies entirely on Atom Computing's neutral atom hardware, not Microsoft's topological research. This partnership represents pragmatic acknowledgment that topological qubits remain theoretical rather than engineering-ready.

For investors, Microsoft's quantum efforts warrant extreme caution. The company's Azure Quantum platform provides value as cloud infrastructure and software development tools—essentially reselling access to third-party quantum hardware from IonQ, Quantinuum, and Atom Computing. But Microsoft's proprietary hardware program shows minimal progress despite billions in R&D investment over 15+ years. Topological qubit timelines should be viewed as 10+ years if feasible at all, making Microsoft's quantum division materially less credible than competitors with demonstrated functional hardware.

The broader pattern: topological qubits represent the "nuclear fusion" of quantum computing—perpetually promising, theoretically elegant, but practically elusive despite decades of research. The approach offers potential advantages (inherent error protection through exotic quasiparticles called Majorana fermions) but requires materials and fabrication techniques that remain unproven at commercial scale. Microsoft's February announcement exemplifies the danger of conflating research progress with commercial readiness, particularly when corporate communications departments amplify preliminary results beyond what scientific evidence supports.

Two pervasive narratives mislead investors about quantum computing's near-term capabilities: raw qubit counts as progress indicators and AI-quantum synergy claims. Both represent measurement theater obscuring genuine technical requirements.

The Qubit Count Trap: IBM Research's warning is direct: "A million qubits won't bring you an inch closer to building a fully-functional quantum computer" without error correction. Physical qubit counts across modalities are fundamentally incomparable. Superconducting systems require 100:1 to 1,000:1 physical-to-logical ratios using surface codes. Neutral atoms achieved 28 logical qubits from 1,180 physical qubits—roughly 42:1 efficiency. IBM's 1,121-qubit Condor processor exemplifies the fallacy: the company immediately pivoted to emphasizing its 133-qubit Heron processor with superior error rates, implicitly acknowledging the 1,000-qubit milestone was public relations rather than technical breakthrough.

The mathematics is unforgiving. State-of-the-art 99.9% two-qubit fidelity means 1 in 1,000 operations fails. A 10,000-step algorithm has a 99.995% probability of encountering at least one error; at 100,000 steps, error-free execution becomes essentially impossible without fault tolerance. Only logical qubits, error-corrected units that sustain extended computations, enable applications justifying quantum computing's development costs. Industry convergence on ~100 logical qubits by 2030 as the meaningful milestone reflects growing sophistication. Alternative metrics emerging include Quantum Volume, Algorithmic Qubits (#AQ), and Quantum Operations per Second (QuOps).
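The failure probabilities follow from compounding a per-operation fidelity over many steps. This minimal sketch assumes errors are independent and identically likely at every step, which is a simplification; the function name is hypothetical.

```python
def p_any_error(fidelity, steps):
    """Probability that at least one of `steps` operations fails,
    assuming independent errors at a fixed per-operation fidelity."""
    return 1 - fidelity ** steps

FIDELITY = 0.999  # the 99.9% two-qubit fidelity quoted above

for steps in (100, 1_000, 10_000, 100_000):
    failure = p_any_error(FIDELITY, steps)
    success = FIDELITY ** steps
    print(f"{steps:>7,} steps: {failure:.5%} chance of an error "
          f"(error-free probability {success:.3e})")
```

At 10,000 steps this reproduces the 99.995% figure, and at 100,000 steps the error-free probability falls far below any number of repetitions one could realistically run.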

The AI Acceleration Myth: Claims that quantum computing will accelerate AI training, optimization, or inference represent aspirational thinking disconnected from technical reality. Google's November 2025 quantum advantage framework admits "few algorithms have advanced to Stage III—establishing quantum advantage in a real-world application. This is the most significant bottleneck." The fundamental misunderstanding: quantum computers accelerate only specific mathematical problems where quantum mechanics provides inherent advantages. Training neural networks involves extensive linear algebra—matrix multiplication, gradient descent, backpropagation—operations where quantum computing offers no known speedup over GPUs optimized through decades of development.

Data input/output represents an insurmountable barrier. Quantum computers require data encoded in quantum states—a non-trivial process potentially consuming more time than any speedup provides. Loading a 175-billion parameter language model would require quantum state preparation orders of magnitude beyond current capabilities. While NVIDIA's DGX Quantum achieves sub-4-microsecond GPU-QPU latency, no production AI systems demonstrably benefit from quantum acceleration.
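A back-of-envelope sketch illustrates why loading is the hard part. It assumes amplitude encoding (one value per quantum amplitude) and a naive cost model of roughly one gate per value loaded, which reflects the general scaling of arbitrary state preparation but is not a claim about any specific algorithm. The gate times are hypothetical round numbers chosen to span the nanosecond-to-microsecond range mentioned earlier.

```python
PARAMETERS = 175_000_000_000  # the 175-billion parameter model quoted above

def qubits_for_amplitudes(n_values):
    """Qubits needed so that 2**qubits >= n_values (amplitude encoding)."""
    return max((n_values - 1).bit_length(), 1)

def naive_load_seconds(n_values, gate_seconds):
    """Assumed cost model: about one gate per value, executed serially."""
    return n_values * gate_seconds

print("Qubits needed:", qubits_for_amplitudes(PARAMETERS))

for label, gate_seconds in (("100 ns gates (hypothetical)", 100e-9),
                            ("1 us gates (hypothetical)", 1e-6)):
    hours = naive_load_seconds(PARAMETERS, gate_seconds) / 3600
    print(f"{label}: about {hours:.1f} hours just to load the data")
```

The qubit count looks modest, but under this model the circuit depth runs to hundreds of billions of gates, far beyond what the error budget computed above allows without fault tolerance.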

The relationship is complementary, not synergistic. AI techniques prove essential for quantum error correction (reinforcement learning optimizing control pulses) and hardware calibration (machine learning identifying optimal qubit parameters). Quantum computing targets quantum mechanical simulation, certain optimization tasks, and cryptography—domains where AI offers no advantage regardless of computational resources. The timeline compression from "2040" to "2030-2033" stems from error correction breakthroughs and neutral atom scaling, not AI integration.

For investors: Evaluate companies on logical qubit roadmaps, error rates, and gate fidelities, not headline physical counts or AI synergy claims. The overlap between quantum and AI represents a narrow research frontier that is unlikely to generate material revenue until the mid-to-late 2030s, when fault-tolerant systems with thousands of logical qubits become available.

The quantum computing sector presents asymmetric risk-return profiles requiring company-level analysis rather than sector-wide enthusiasm. Public market quantum stocks—IonQ, Rigetti, D-Wave, Quantum Computing Inc.—trade at price-to-sales ratios exceeding 30×, well above historical bubble thresholds, despite generating combined revenues under $150 million annually.

Over the trailing 12 months through November 2025, these four companies experienced stock gains of 260% to 3,060%, followed by corrections of 24-50% from October peaks after Q3 earnings disappointed.

Insider activity reveals troubling patterns: $749 million in net insider selling across the four public quantum companies versus minimal buying in the trailing 12 months through Q3 2025 (one IonQ purchase of $2 million in March 2025). IonQ executives sold $446.5 million, D-Wave insiders sold $224 million, Rigetti management sold $45.6 million, and Quantum Computing Inc. insiders sold $33.2 million net.

This selling occurred while stock prices traded at or near all-time highs, suggesting executives view current valuations as unsustainable relative to near-term commercial prospects.

The pattern resembles early internet stocks circa 1999-2000 or SPACs in 2021—genuine technological revolution with premature valuations disconnected from near-term fundamentals. Historical precedent supports caution: every major technology innovation experienced early bubble-bursting events before eventual commercial success.

The internet bubble crashed 80% from 2000-2002 before Amazon, Google, and others emerged as dominant platforms. Cleantech stocks collapsed 2008-2010 despite solar and batteries eventually becoming massive industries. SPACs crashed 2021-2022 with many falling 90%+ despite some underlying businesses having legitimate prospects. Amazon fell 95% from its 2000 peak before becoming the defining e-commerce platform.

Analyst perspectives range from bullish to alarmed. While some project 50%+ upside, Morgan Stanley's $32 IonQ price target implies 47% downside from mid-November levels, and Cantor Fitzgerald's $20 D-Wave target suggests 50%+ potential decline. Multiple analysts emphasize quantum systems remain "four years from solving practical problems faster than classical computers," noting that even with triple-digit revenue growth, stocks would need to trade sideways 5-10 years to justify current valuations through organic earnings growth.

The most prudent public market strategy: expect 50%+ corrections in 2026-2027 before longer-term growth resumes. Patient capital willing to wait through bubble-bursting events and enter after 50-70% corrections from current levels may find attractive entry points as commercial timelines clarify and revenue trajectories materialize. Near-term volatility will be extreme, with potential for additional 30-50% declines beyond initial corrections if CRQC timelines slip or technical roadblocks emerge.
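The asymmetry between losses and the gains needed to recover them explains why entry timing matters so much here. This sketch applies standard percentage arithmetic to the drawdown figures mentioned in this section; the function names are hypothetical.

```python
def gain_to_recover(drawdown):
    """Fractional gain needed to return to the prior peak after a drawdown."""
    if not 0 <= drawdown < 1:
        raise ValueError("drawdown must be in [0, 1)")
    return 1 / (1 - drawdown) - 1

def compound_drawdown(*declines):
    """Total peak-to-trough decline from successive fractional declines."""
    remaining = 1.0
    for decline in declines:
        remaining *= 1 - decline
    return 1 - remaining

for drawdown in (0.50, 0.70, 0.95):
    print(f"{drawdown:.0%} decline needs a {gain_to_recover(drawdown):.0%} gain to recover")

# A 50% correction followed by a further 30% or 50% decline.
for extra in (0.30, 0.50):
    total = compound_drawdown(0.50, extra)
    print(f"50% then {extra:.0%}: {total:.0%} total decline")
```

An investor who buys at the peak needs the stock to double after a 50% correction just to break even, while one who enters after the correction starts from that lower base.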

For sophisticated investors seeking quantum exposure without bubble risk, NVIDIA represents the most de-risked positioning. The company profits regardless of which quantum modality wins through investments across trapped ion (Quantinuum), photonic (PsiQuantum), and neutral atom (QuEra) approaches. NVIDIA extracts value throughout the hybrid quantum-classical workflow through NVQLink interconnects (coupling quantum processing units to GPU supercomputers with microsecond-scale latency), the CUDA-Q development environment (enabling quantum algorithm development), and GPU-based error correction simulation and calibration.

NVIDIA's quantum positioning mirrors its historical pattern: providing infrastructure (GPUs for AI, cryptocurrency mining, autonomous vehicles) rather than building end applications. The company's $500 billion GPU order backlog over the next five quarters and dominant AI position provide downside protection, while quantum represents asymmetric upside optionality. Even if quantum computing development encounters delays, NVIDIA's core business remains insulated. If quantum computing delivers on compressed timelines, NVIDIA captures infrastructure layer value across all successful modalities.

Private market quantum investments concentrate in late-stage companies with credible paths to commercial products. PsiQuantum ($1 billion Series E at $7 billion valuation) and Quantinuum ($600 million at $10 billion pre-money valuation) captured approximately 50% of 2025 quantum investment, suggesting market consolidation around perceived winners rather than broad ecosystem expansion. This concentration increases execution risk: PsiQuantum's photonic approach represents an "all-or-nothing" bet on solving photon loss at unprecedented scale, while Quantinuum's trapped-ion systems face known scaling challenges requiring networking multiple ion trap modules.

Neutral atom quantum computing companies (Atom Computing, QuEra, and Pasqal) present the most compelling risk-reward for sophisticated investors because the market undervalues them relative to their technical progress. The modality's advantages (atomic uniformity, all-to-all connectivity, room-temperature components, clear scaling path) address superconducting systems' fundamental limitations, while government selections (DARPA Quantum Benchmarking Initiative Stage B for Atom Computing and QuEra) and strategic partnerships (Microsoft-Atom, LG Electronics-Pasqal) validate commercial trajectories.

These remain private companies requiring accredited investor access, though QuEra and Pasqal contemplate public listings (QuEra potentially via SPAC combination, Pasqal targeting a late 2025 European listing). Current estimated private market valuations ($400 million to $1 billion) remain substantially below those of competitors in other modalities despite comparable or superior technical achievements.

For comparison: Quantinuum ($10 billion), PsiQuantum ($7 billion), IonQ (public, $7+ billion market cap at peaks). The 5-20× valuation discount for neutral atom companies versus trapped-ion and photonic competitors creates potential entry points before public market discovery drives convergence.

Government contractors selected for DARPA Quantum Benchmarking Initiative Stage B—11 companies including Quantinuum, IonQ, Rigetti, Atom Computing, QuEra, Nord Quantique, D-Wave—benefit from non-dilutive funding up to $15 million for Stage B R&D baseline plans and potential $300 million per company for Stage C utility-scale construction by 2033. This de-risks technology development through government support while maintaining commercial upside, though government contracts often come with lower margins and intellectual property constraints limiting future commercial flexibility.

The strategic imperative: quantum-readiness despite uncertainty. Organizations should prioritize post-quantum cryptography migration immediately despite quantum computing's 5-8 year timeline to CRQC capability. The harvest-now-decrypt-later threat is active today, with documented traffic redirection incidents and federal government recognition of urgency. Data requiring protection beyond 2030—classified communications, financial records, trade secrets, healthcare information, critical infrastructure data—must transition to quantum-resistant encryption before adversaries finish collection efforts already underway.

President Trump's June 2025 Executive Order rolling back Biden Administration PQC mandates creates dangerous complacency. Responsible organizations will implement hybrid classical-PQC cryptography over 2025-2027 regardless of federal requirements, recognizing that the window for protecting long-lived sensitive data closes before quantum computers achieve full decryption capability.
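The urgency argument above is commonly formalized as Mosca's inequality, which is named here as an outside framing rather than something this commentary cites: data is exposed when its required protection lifetime plus the time needed to migrate exceeds the time until a cryptographically relevant quantum computer exists. A minimal sketch, where the 5-8 year CRQC range comes from the text and the shelf-life and migration figures are hypothetical placeholders:

```python
def migration_is_urgent(shelf_life_years, migration_years, years_to_crqc):
    """Mosca's inequality: exposure exists if shelf life + migration time > time to CRQC."""
    return shelf_life_years + migration_years > years_to_crqc


# CRQC horizon from the text: 5 to 8 years.
CRQC_EARLY, CRQC_LATE = 5, 8

# Hypothetical data classes: (label, years the data must stay secret).
# Migration time of 2 years is an assumption based on the 2025-2027 window above.
MIGRATION_YEARS = 2
data_classes = [
    ("short-lived session data", 1),
    ("financial records", 7),
    ("healthcare information", 25),
]

for label, shelf_life in data_classes:
    early = migration_is_urgent(shelf_life, MIGRATION_YEARS, CRQC_EARLY)
    late = migration_is_urgent(shelf_life, MIGRATION_YEARS, CRQC_LATE)
    print(f"{label}: urgent if CRQC in 5y={early}, in 8y={late}")
```

Under these placeholder inputs, only short-lived data escapes the inequality, and anything that must stay confidential for several years is already inside the exposure window if traffic is being harvested today.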

Building quantum literacy justifies investment today even without immediate applications. The 3:1 job-to-candidate ratio for quantum professionals and 180% growth in quantum job listings (2020-2024) suggest workforce bottlenecks will constrain adoption as hardware matures. Organizations that establish quantum research collaborations and train technical staff position themselves for the 2027-2030 transition, when quantum advantage emerges for narrow commercial applications.

The pharmaceutical, financial services, logistics, and materials science sectors face the highest potential impact and should maintain active quantum research programs while recognizing that production deployment remains 3-7 years away. Early pilot programs establish institutional knowledge and identify which problems might benefit from quantum approaches, though expectations should remain calibrated: most pharmaceutical and materials science applications require 100,000+ qubits (current systems: 36-1,180), suggesting transformative impact will not arrive before the late 2020s to early 2030s.

The investment thesis: quantum computing reached its inflection point in Fall 2025 through simultaneous breakthroughs in error correction physics and neutral atom hardware scalability. Timeline compression from "maybe 2040" to "likely 2030-2033" for cryptographically-relevant systems and "2027-2030" for narrow commercial advantages creates asymmetric opportunities for investors distinguishing material technical progress from marketing narratives and tolerating extreme near-term volatility for longer-term structural positioning.

The race to useful quantum computing is entering its most critical phase, where hardware scaling meets algorithm development and commercial demand. Winners will be determined by achieving highest logical qubit counts with lowest error rates at commercially viable operational costs. Neutral atom quantum computing's emergence as the dark horse competitive threat exemplifies how the field's dynamics remain fluid, with fundamental assumptions subject to disruption by breakthrough physics and engineering innovations.

For sophisticated investors evaluating quantum exposure, the most defensible positioning appears to be: avoiding public quantum stocks at current valuations, building exposure through diversified infrastructure plays like NVIDIA, seeking private market access to neutral atom companies at valuations predating public market discovery, and maintaining quantum literacy through research partnerships. Those who successfully navigate quantum computing's transition from NISQ-era speculation to fault-tolerant commercial reality could find themselves positioned at the technological inflection point defining the next decade of strategic competition and economic value creation.

Neutral atom quantum computing's emergence represents material competitive repositioning validated through multiple independent signals: record-breaking error-corrected logical qubits (24 entangled, 28 demonstrated by Atom Computing-Microsoft), strategic capital deployment ($230 million QuEra raise from Google Ventures/SoftBank with NVIDIA expansion investment, estimated valuations of $400M-$1B versus trapped-ion and photonic competitors at $7-10B+), government validation (DARPA Stage B selections for Atom Computing and QuEra), and commercial traction (Microsoft Azure Quantum partnership, AWS Braket integration, international hardware sales to Japan AIST and UK National Quantum Computing Centre).

The technology's superior scaling physics—atomic uniformity eliminating fabrication variation, all-to-all connectivity avoiding SWAP gate overhead, room-temperature optical components reducing operational complexity, and demonstrated 42:1 physical-to-logical qubit ratio (2-3× better than surface code projections)—addresses fundamental limitations constraining superconducting approaches. The 2015-2025 commercialization velocity (academic demonstration to enterprise deployment in under 10 years) exceeds superconducting qubits' multi-decade development cycle while achieving comparable or superior technical milestones.
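The 42:1 ratio can be checked against the other figures in this commentary. The sketch below assumes a fixed physical-to-logical overhead, which is a simplification (real overhead depends on the error-correcting code, its distance, and physical error rates), and the helper name is illustrative:

```python
def logical_qubits(physical_qubits, physical_per_logical):
    """Whole logical qubits supported at a fixed physical-to-logical overhead."""
    if physical_per_logical <= 0:
        raise ValueError("overhead ratio must be positive")
    return physical_qubits // physical_per_logical


PHYSICAL = 1_180        # second-generation system size cited in the text
NEUTRAL_ATOM_RATIO = 42  # demonstrated ratio cited in the text

# "2-3x better than surface code projections" implies roughly 84:1 to 126:1
# for the surface code; these two values are derived here, not quoted.
surface_code_ratios = (2 * NEUTRAL_ATOM_RATIO, 3 * NEUTRAL_ATOM_RATIO)

print("neutral atom:", logical_qubits(PHYSICAL, NEUTRAL_ATOM_RATIO))
for ratio in surface_code_ratios:
    print(f"surface code at {ratio}:1:", logical_qubits(PHYSICAL, ratio))
```

At 42:1, 1,180 physical qubits support 28 logical qubits, matching the Atom Computing-Microsoft demonstration, while the implied surface code overheads would yield about 9 to 14 from the same hardware.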

However, the signal-strength rating stops short of 9-10 because neutral atoms face known challenges: gate operation speeds lag superconducting qubits by 100-1000×, laser control complexity increases as systems scale beyond current 1,180-qubit demonstrations, and atom loading/loss rates require continuous replenishment systems unproven at industrial scale. The technology's commercial deployment timeline (2027-2030 for meaningful advantage) depends on solving these engineering challenges while competing against superconducting systems benefiting from decades of ecosystem development, intellectual property accumulation, and fabrication expertise.

The broader quantum computing inflection—error correction threshold crossing, timeline compression to 2030-2033, government funding escalation—receives 9.0 signal strength as a field-wide development. Neutral atoms specifically receive 8.5, reflecting high conviction tempered by execution risk and incumbent competitive positioning. The combination creates asymmetric opportunity: underappreciated technology with superior physics attacking established players, but requiring successful scaling and ecosystem development to realize potential. For patient capital with 5-7 year investment horizons, the risk-reward profile justifies allocation despite near-term uncertainty.

This commentary is provided for informational purposes only and does not constitute investment advice, an offer to sell, or a solicitation to buy any security. The information presented represents the opinions of The Stanley Laman Group as of the date of publication and is subject to change without notice.

The securities, strategies, and investment themes discussed may not be suitable for all investors. Investors should conduct their own research and due diligence and should seek the advice of a qualified investment advisor before making any investment decisions. The Stanley Laman Group and its affiliates may hold positions in securities mentioned in this commentary.

Past performance is not indicative of future results. All investments involve risk, including the potential loss of principal. Forward-looking statements, projections, and hypothetical scenarios are inherently uncertain and actual results may differ materially from expectations.

The information contained herein is believed to be accurate but is not guaranteed. Sources are cited where appropriate, but The Stanley Laman Group makes no representation as to the accuracy or completeness of third-party information.

This material may not be reproduced or distributed without the express written consent of The Stanley Laman Group.

© 2025 The Stanley-Laman Group, Ltd. All rights reserved.